Appeals court rules TikTok could be responsible for algorithm that recommended fatal strangulation game to child

TikTok cannot use federal law "to permit casual indifference to the death of a ten-year-old girl," a federal judge wrote this week. And with that, an appeals court has opened a Pandora's box that might clear the way for Big Tech accountability.

Silicon Valley companies have become rich and powerful in part because federal law has shielded them from liability for many of the terrible things their tools enable and encourage -- and, it follows, from the expense of stopping such things. Smartphones have poisoned our children's brains and turned them into The Anxious Generation; social media and cryptocurrency have enabled a generation of scam criminals to rob billions from our most vulnerable people; advertising algorithms tap into our subconscious in an attempt to destroy our very agency as human beings. To date, tech firms have made only passing attempts to stop such terrible things, emboldened by federal law which has so far shielded them from liability ... even when they "recommend" that kids do things which lead to death.

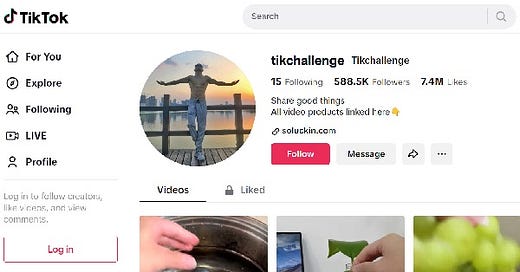

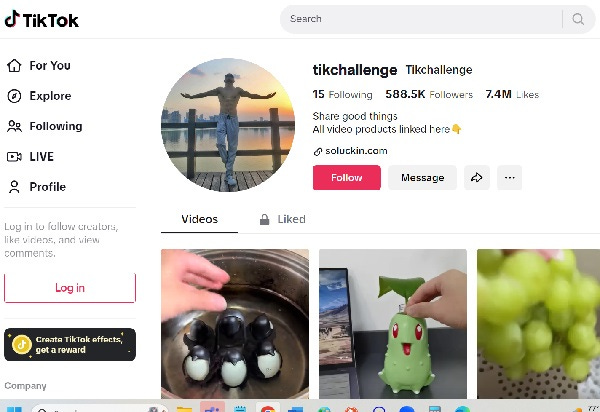

That's what happened to 10-year-old Nylah Anderson, who was served a curated "blackout challenge" video by TikTok on a personalized "For You" page back in 2021. She was among a series of children who took up that challenge and experimented with self-asphyxiation -- and died. When her mother, Tawainna Anderson, tried to sue TikTok, a lower court threw out the case two years ago, saying tech companies enjoy broad immunity under Section 230 of the 1996 Communications Decency Act.

You've probably heard of that. Section 230 has been used as a get-out-of-jail-free card by Big Tech for decades; it's also been used as an endless source of bar fights among legal scholars.

But now, with very colorful language, a federal appeals court has revived the Anderson family lawsuit and thrown Section 230 protection into doubt. Third Circuit Judge Paul Matey's concurring opinion seethes at the idea that tech companies aren't required to stop awful things from happening on their platforms, even when it's obvious that they could. He also takes a shot at those who seem to care more about the scholarly debate than about the clear and present danger facilitated by tech tools. It's worth reading this part of the ruling in full.

TikTok reads Section 230 of the Communications Decency Act... to permit casual indifference to the death of a ten-year-old girl. It is a position that has become popular among a host of purveyors of pornography, self-mutilation, and exploitation, one that smuggles constitutional conceptions of a “free trade in ideas” into a digital “cauldron of illicit loves” that leap and boil with no oversight, no accountability, no remedy. And a view that has found support in a surprising number of judicial opinions dating from the early days of dialup to the modern era of algorithms, advertising, and apps. But it is not found in the words Congress wrote in Section 230, in the context Congress acted, in the history of common carriage regulations, or in the centuries of tradition informing the limited immunity from liability enjoyed by publishers and distributors of “content.” As best understood, the ordinary meaning of Section 230 provides TikTok immunity from suit for hosting videos created and uploaded by third parties. But it does not shield more, and Anderson’s estate may seek relief for TikTok’s knowing distribution and targeted recommendation of videos it knew could be harmful.

Later on, the opinion says, "The company may decide to curate the content it serves up to children to emphasize the lowest virtues, the basest tastes. But it cannot claim immunity that Congress did not provide."

The ruling doesn't tear down all Big Tech immunity. It makes a distinction between TikTok's algorithm specifically recommending a blackout video to a child after the firm knew, or should have known, that it was dangerous, as opposed to a child seeking out such a video "manually" through a self-directed search. That kind of distinction has been lost through years of reading Section 230 at its most generous from Big Tech's point of view. I think we all know where that has gotten us.

In the simplest of terms, tech companies shouldn't be held liable for everything their users do, any more than the phone company can be liable for everything callers say on telephone lines -- or, as the popular legal analogy goes, any more than a newsstand can be liable for the content of the magazines it sells.

After all, that newsstand has no editorial control over those magazines. Back in the 1990s, Section 230 added just a touch of nuance to this concept, which was required because tech companies occasionally dip into their users' content and restrict it. Tech firms remove illegal drug sales, or child porn, for example. That might seem akin to the exercise of editorial control, but we want tech companies to do this. So Congress declared that such occasional meddling does not turn a tech firm from a newsstand into a publisher. The firm does not assume additional liability because of such moderation -- it enjoys immunity.

This immunity has been used as permission for all kinds of undesirable activity. To use another mildly strained metaphor, a shopping mall would never be allowed to operate if it ignored massive amounts of crime going on in its hallways, let alone supplied a series of tools that enable elder fraud, or impersonation, or money laundering. But tech companies do that all the time. In fact, we know from whistleblowers like Frances Haugen that tech firms are fully aware their tools help connect anxious kids with videos that glorify anorexia. And they lead lonely and grief-stricken people right to criminals who are expert at stealing hundreds of thousands of dollars from them. And they allow ongoing crimes like identity theft to continue without so much as answering the phone when desperate victims call, like the American service members who must watch as their official uniform portraits are used for romance scams.

Will tech companies have to change their ways now? Will they have to invest real money into customer service to stop such crimes, and to stop their algorithms from recommending terrible things? You'll hear that such an investment is an overwhelming demand. Can you imagine if a large social media firm were forced to hire enough customer service agents to deal with fraud in a timely manner? It might put the company out of business. In my opinion, that means it never had a legitimate business model in the first place.

This week's ruling draws an appropriate distinction between tech firms that passively host undesirable content and firms that actively promote such content via algorithm. In other words, algorithmic recommendations are akin to editorial control, and Big Tech must answer for what its algorithms do. You have to ask: Why wouldn't these companies welcome that kind of responsibility?

The Section 230 debate will rage on. Since both political parties have railed against Big Tech, and there is appetite for change, it does seem like Congress will get involved. Good. Section 230 is desperate for an update. Just watch carefully to make sure Big Tech doesn't write its own rules for regulating the next era of the digital age. Because it didn't do so well with the current era.

If you want to read more, I'd recommend Matt Stoller's Substack post on the ruling.

